Classes | |

| struct | cuda_submit_charges_args |

Public Member Functions | |

| ComputePmeMgr () | |

| ~ComputePmeMgr () | |

| void | initialize (CkQdMsg *) |

| void | initialize_pencils (CkQdMsg *) |

| void | activate_pencils (CkQdMsg *) |

| void | recvArrays (CProxy_PmeXPencil, CProxy_PmeYPencil, CProxy_PmeZPencil) |

| void | initialize_computes () |

| void | sendData (Lattice &, int sequence) |

| void | sendDataPart (int first, int last, Lattice &, int sequence, int sourcepe, int errors) |

| void | sendPencils (Lattice &, int sequence) |

| void | sendPencilsPart (int first, int last, Lattice &, int sequence, int sourcepe) |

| void | recvGrid (PmeGridMsg *) |

| void | gridCalc1 (void) |

| void | sendTransBarrier (void) |

| void | sendTransSubset (int first, int last) |

| void | sendTrans (void) |

| void | fwdSharedTrans (PmeTransMsg *) |

| void | recvSharedTrans (PmeSharedTransMsg *) |

| void | sendDataHelper (int) |

| void | sendPencilsHelper (int) |

| void | recvTrans (PmeTransMsg *) |

| void | procTrans (PmeTransMsg *) |

| void | gridCalc2 (void) |

| void | gridCalc2R (void) |

| void | fwdSharedUntrans (PmeUntransMsg *) |

| void | recvSharedUntrans (PmeSharedUntransMsg *) |

| void | sendUntrans (void) |

| void | sendUntransSubset (int first, int last) |

| void | recvUntrans (PmeUntransMsg *) |

| void | procUntrans (PmeUntransMsg *) |

| void | gridCalc3 (void) |

| void | sendUngrid (void) |

| void | sendUngridSubset (int first, int last) |

| void | recvUngrid (PmeGridMsg *) |

| void | recvAck (PmeAckMsg *) |

| void | copyResults (PmeGridMsg *) |

| void | copyPencils (PmeGridMsg *) |

| void | ungridCalc (void) |

| void | recvRecipEvir (PmeEvirMsg *) |

| void | addRecipEvirClient (void) |

| void | submitReductions () |

| void | chargeGridSubmitted (Lattice &lattice, int sequence) |

| void | cuda_submit_charges (Lattice &lattice, int sequence) |

| void | sendChargeGridReady () |

| void | pollChargeGridReady () |

| void | pollForcesReady () |

| void | recvChargeGridReady () |

| void | chargeGridReady (Lattice &lattice, int sequence) |

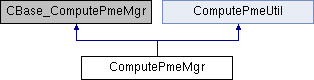

Public Member Functions inherited from ComputePmeUtil | |

| ComputePmeUtil () | |

| ~ComputePmeUtil () | |

Public Attributes | |

| Lattice * | sendDataHelper_lattice |

| int | sendDataHelper_sequence |

| int | sendDataHelper_sourcepe |

| int | sendDataHelper_errors |

| CmiNodeLock | pmemgr_lock |

| float * | a_data_host |

| float * | a_data_dev |

| float * | f_data_host |

| float * | f_data_dev |

| int | cuda_atoms_count |

| int | cuda_atoms_alloc |

| cudaEvent_t | end_charges |

| cudaEvent_t * | end_forces |

| int | forces_count |

| int | forces_done_count |

| double | charges_time |

| double | forces_time |

| int | check_charges_count |

| int | check_forces_count |

| int | master_pe |

| int | this_pe |

| int | chargeGridSubmittedCount |

| Lattice * | saved_lattice |

| int | saved_sequence |

| ResizeArray< ComputePme * > | pmeComputes |

Static Public Attributes | |

| static CmiNodeLock | fftw_plan_lock |

| static CmiNodeLock | cuda_lock |

| static std::deque< cuda_submit_charges_args > | cuda_submit_charges_deque |

| static bool | cuda_busy |

Static Public Attributes inherited from ComputePmeUtil | |

| static int | numGrids |

| static Bool | alchOn |

| static Bool | alchFepOn |

| static Bool | alchThermIntOn |

| static Bool | alchDecouple |

| static BigReal | alchElecLambdaStart |

| static Bool | lesOn |

| static int | lesFactor |

| static Bool | pairOn |

| static Bool | selfOn |

| static Bool | LJPMEOn |

Friends | |

| class | ComputePme |

| class | NodePmeMgr |

Additional Inherited Members | |

Static Public Member Functions inherited from ComputePmeUtil | |

| static void | select (void) |

Detailed Description

Definition at line 383 of file ComputePme.C.

Constructor & Destructor Documentation

◆ ComputePmeMgr()

| ComputePmeMgr::ComputePmeMgr | ( | ) |

Definition at line 738 of file ComputePme.C.

References chargeGridSubmittedCount, check_charges_count, check_forces_count, cuda_atoms_alloc, cuda_atoms_count, cuda_errcheck(), CUDA_EVENT_ID_PME_CHARGES, CUDA_EVENT_ID_PME_COPY, CUDA_EVENT_ID_PME_FORCES, CUDA_EVENT_ID_PME_KERNEL, CUDA_EVENT_ID_PME_TICK, cuda_lock, CUDA_STREAM_CREATE, end_charges, end_forces, fftw_plan_lock, NUM_STREAMS, pmemgr_lock, and this_pe.

◆ ~ComputePmeMgr()

| ComputePmeMgr::~ComputePmeMgr | ( | ) |

Member Function Documentation

◆ activate_pencils()

| void ComputePmeMgr::activate_pencils | ( | CkQdMsg * | msg | ) |

Definition at line 1816 of file ComputePme.C.

◆ addRecipEvirClient()

| void ComputePmeMgr::addRecipEvirClient | ( | void | ) |

Definition at line 3064 of file ComputePme.C.

◆ chargeGridReady()

| void ComputePmeMgr::chargeGridReady | ( | Lattice & | lattice, |

| int | sequence | ||

| ) |

Definition at line 3626 of file ComputePme.C.

References PmeGrid::K3, NAMD_bug(), PmeGrid::order, pmeComputes, sendData(), sendPencils(), and ResizeArray< Elem >::size().

Referenced by ComputePme::doWork(), and recvChargeGridReady().

◆ chargeGridSubmitted()

| void ComputePmeMgr::chargeGridSubmitted | ( | Lattice & | lattice, |

| int | sequence | ||

| ) |

Definition at line 3567 of file ComputePme.C.

References chargeGridSubmittedCount, CUDA_EVENT_ID_PME_COPY, end_charges, master_pe, Node::Object(), saved_lattice, saved_sequence, Node::simParameters, and simParams.

Referenced by cuda_submit_charges().

◆ copyPencils()

| void ComputePmeMgr::copyPencils | ( | PmeGridMsg * | msg | ) |

Definition at line 3872 of file ComputePme.C.

References PmeGrid::block1, PmeGrid::block2, PmeGrid::dim2, PmeGrid::dim3, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, ComputePmeUtil::numGrids, PmeGrid::order, PmeGridMsg::qgrid, PmeGridMsg::sourceNode, PmeGridMsg::zlist, and PmeGridMsg::zlistlen.

Referenced by recvUngrid().

◆ copyResults()

| void ComputePmeMgr::copyResults | ( | PmeGridMsg * | msg | ) |

Definition at line 4064 of file ComputePme.C.

References PmeGrid::dim3, PmeGridMsg::fgrid, PmeGrid::K3, PmeGridMsg::len, ComputePmeUtil::numGrids, PmeGrid::order, PmeGridMsg::qgrid, PmeGridMsg::start, PmeGridMsg::zlist, and PmeGridMsg::zlistlen.

Referenced by recvUngrid().

◆ cuda_submit_charges()

| void ComputePmeMgr::cuda_submit_charges | ( | Lattice & | lattice, |

| int | sequence | ||

| ) |

Definition at line 3512 of file ComputePme.C.

References a_data_dev, a_data_host, chargeGridSubmitted(), charges_time, cuda_atoms_count, CUDA_EVENT_ID_PME_COPY, CUDA_EVENT_ID_PME_KERNEL, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, and PmeGrid::order.

Referenced by ComputePme::doWork().

◆ fwdSharedTrans()

| void ComputePmeMgr::fwdSharedTrans | ( | PmeTransMsg * | msg | ) |

Definition at line 2042 of file ComputePme.C.

References PmeSharedTransMsg::count, PmeSharedTransMsg::lock, PmeSharedTransMsg::msg, NodePmeInfo::npe, NodePmeInfo::pe_start, PME_TRANS_PRIORITY, PRIORITY_SIZE, PmeTransMsg::sequence, and SET_PRIORITY.

Referenced by sendTransSubset().

◆ fwdSharedUntrans()

| void ComputePmeMgr::fwdSharedUntrans | ( | PmeUntransMsg * | msg | ) |

Definition at line 2305 of file ComputePme.C.

References PmeSharedUntransMsg::count, PmeSharedUntransMsg::lock, PmeSharedUntransMsg::msg, NodePmeInfo::npe, and NodePmeInfo::pe_start.

Referenced by sendUntransSubset().

◆ gridCalc1()

| void ComputePmeMgr::gridCalc1 | ( | void | ) |

Definition at line 1934 of file ComputePme.C.

References PmeGrid::dim2, PmeGrid::dim3, and ComputePmeUtil::numGrids.

◆ gridCalc2()

| void ComputePmeMgr::gridCalc2 | ( | void | ) |

Definition at line 2110 of file ComputePme.C.

References PmeGrid::dim3, gridCalc2R(), ComputePmeUtil::numGrids, LocalPmeInfo::ny_after_transpose, and simParams.

◆ gridCalc2R()

| void ComputePmeMgr::gridCalc2R | ( | void | ) |

Definition at line 2170 of file ComputePme.C.

References CKLOOP_CTRL_PME_KSPACE, PmeKSpace::compute_energy(), PmeKSpace::compute_energy_LJPME(), PmeGrid::dim3, ComputeNonbondedUtil::ewaldcof, ComputeNonbondedUtil::LJewaldcof, ComputePmeUtil::LJPMEOn, ComputePmeUtil::numGrids, LocalPmeInfo::ny_after_transpose, and Node::Object().

Referenced by gridCalc2().

◆ gridCalc3()

| void ComputePmeMgr::gridCalc3 | ( | void | ) |

Definition at line 2379 of file ComputePme.C.

References PmeGrid::dim2, PmeGrid::dim3, and ComputePmeUtil::numGrids.

◆ initialize()

| void ComputePmeMgr::initialize | ( | CkQdMsg * | msg | ) |

Definition at line 890 of file ComputePme.C.

References Lattice::a(), Lattice::a_r(), ResizeArray< Elem >::add(), ResizeArray< Elem >::begin(), PmeGrid::block1, PmeGrid::block2, PmeGrid::block3, cuda_errcheck(), deviceCUDA, PmeGrid::dim2, PmeGrid::dim3, ResizeArray< Elem >::end(), endi(), fftw_plan_lock, findRecipEvirPe(), generatePmePeList2(), DeviceCUDA::getDeviceID(), PmePencilInitMsgData::grid, iINFO(), iout, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, PatchMap::max_a(), PatchMap::min_a(), NAMD_bug(), NAMD_die(), PatchMap::node(), NodePmeInfo::npe, ComputePmeUtil::numGrids, PatchMap::numNodesWithPatches(), PatchMap::numPatches(), PatchMap::numPatchesOnNode(), LocalPmeInfo::nx, LocalPmeInfo::ny_after_transpose, PatchMap::Object(), Node::Object(), DeviceCUDA::one_device_per_node(), PmeGrid::order, NodePmeInfo::pe_start, WorkDistrib::peDiffuseOrdering, pencilPMEProcessors, PmePencilInitMsgData::pmeNodeProxy, PmePencilInitMsgData::pmeProxy, NodePmeInfo::real_node, Random::reorder(), ResizeArray< Elem >::resize(), Node::simParameters, simParams, ResizeArray< Elem >::size(), SortableResizeArray< Elem >::sort(), WorkDistrib::sortPmePes(), Vector::unit(), LocalPmeInfo::x_start, PmePencilInitMsgData::xBlocks, PmePencilInitMsgData::xm, PmePencilInitMsgData::xPencil, LocalPmeInfo::y_start_after_transpose, PmePencilInitMsgData::yBlocks, PmePencilInitMsgData::ym, PmePencilInitMsgData::yPencil, PmePencilInitMsgData::zBlocks, PmePencilInitMsgData::zm, and PmePencilInitMsgData::zPencil.

◆ initialize_computes()

| void ComputePmeMgr::initialize_computes | ( | ) |

Definition at line 2765 of file ComputePme.C.

References chargeGridSubmittedCount, cuda_errcheck(), cuda_init_bspline_coeffs(), cuda_lock, deviceCUDA, PmeGrid::dim2, PmeGrid::dim3, DeviceCUDA::getDeviceID(), DeviceCUDA::getMasterPe(), ijpair::i, ijpair::j, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, master_pe, NAMD_bug(), ComputePmeUtil::numGrids, PatchMap::numPatchesOnNode(), PatchMap::Object(), Node::Object(), ReductionMgr::Object(), PmeGrid::order, REDUCTIONS_BASIC, Node::simParameters, simParams, ReductionMgr::willSubmit(), and XCOPY.

◆ initialize_pencils()

| void ComputePmeMgr::initialize_pencils | ( | CkQdMsg * | msg | ) |

Definition at line 1721 of file ComputePme.C.

References Lattice::a(), Lattice::a_r(), Lattice::b(), Lattice::b_r(), PmeGrid::block1, PmeGrid::block2, deviceCUDA, DeviceCUDA::getMasterPe(), PmeGrid::K1, PmeGrid::K2, PatchMap::max_a(), PatchMap::max_b(), PatchMap::min_a(), PatchMap::min_b(), PatchMap::node(), PatchMap::numPatches(), PatchMap::Object(), Node::Object(), PmeGrid::order, Random::reorder(), Node::simParameters, simParams, and Vector::unit().

◆ pollChargeGridReady()

| void ComputePmeMgr::pollChargeGridReady | ( | ) |

Definition at line 3613 of file ComputePme.C.

References CcdCallBacksReset(), cuda_check_pme_charges(), CUDA_POLL, and NAMD_bug().

◆ pollForcesReady()

| void ComputePmeMgr::pollForcesReady | ( | ) |

Definition at line 2701 of file ComputePme.C.

References CcdCallBacksReset(), cuda_check_pme_forces(), CUDA_POLL, and NAMD_bug().

◆ procTrans()

| void ComputePmeMgr::procTrans | ( | PmeTransMsg * | msg | ) |

Definition at line 2076 of file ComputePme.C.

References PmeGrid::dim3, PmeTransMsg::lattice, NodePmeInfo::npe, ComputePmeUtil::numGrids, PmeTransMsg::nx, LocalPmeInfo::ny_after_transpose, NodePmeInfo::pe_start, PmeTransMsg::qgrid, PmeTransMsg::sequence, PmeTransMsg::x_start, and LocalPmeInfo::y_start_after_transpose.

Referenced by recvSharedTrans(), and recvTrans().

◆ procUntrans()

| void ComputePmeMgr::procUntrans | ( | PmeUntransMsg * | msg | ) |

Definition at line 2337 of file ComputePme.C.

References PmeGrid::dim3, PmeGrid::K2, NodePmeInfo::npe, ComputePmeUtil::numGrids, LocalPmeInfo::nx, PmeUntransMsg::ny, NodePmeInfo::pe_start, PmeUntransMsg::qgrid, LocalPmeInfo::x_start, and PmeUntransMsg::y_start.

Referenced by recvSharedUntrans(), and recvUntrans().

◆ recvAck()

| void ComputePmeMgr::recvAck | ( | PmeAckMsg * | msg | ) |

Definition at line 2479 of file ComputePme.C.

References cuda_lock, master_pe, and NAMD_bug().

Referenced by recvUngrid().

◆ recvArrays()

| void ComputePmeMgr::recvArrays | ( | CProxy_PmeXPencil | x, |

| CProxy_PmeYPencil | y, | ||

| CProxy_PmeZPencil | z | ||

| ) |

Definition at line 828 of file ComputePme.C.

◆ recvChargeGridReady()

| void ComputePmeMgr::recvChargeGridReady | ( | ) |

Definition at line 3622 of file ComputePme.C.

References chargeGridReady(), saved_lattice, and saved_sequence.

◆ recvGrid()

| void ComputePmeMgr::recvGrid | ( | PmeGridMsg * | msg | ) |

Definition at line 1855 of file ComputePme.C.

References PmeGrid::dim3, PmeGridMsg::fgrid, PmeGridMsg::lattice, NAMD_bug(), ComputePmeUtil::numGrids, PmeGridMsg::qgrid, PmeGridMsg::sequence, PmeGridMsg::zlist, and PmeGridMsg::zlistlen.

◆ recvRecipEvir()

| void ComputePmeMgr::recvRecipEvir | ( | PmeEvirMsg * | msg | ) |

Definition at line 3068 of file ComputePme.C.

References PmeEvirMsg::evir, NAMD_bug(), ComputePmeUtil::numGrids, pmeComputes, ResizeArray< Elem >::size(), and submitReductions().

◆ recvSharedTrans()

| void ComputePmeMgr::recvSharedTrans | ( | PmeSharedTransMsg * | msg | ) |

Definition at line 2058 of file ComputePme.C.

References PmeSharedTransMsg::count, PmeSharedTransMsg::lock, PmeSharedTransMsg::msg, and procTrans().

◆ recvSharedUntrans()

| void ComputePmeMgr::recvSharedUntrans | ( | PmeSharedUntransMsg * | msg | ) |

Definition at line 2319 of file ComputePme.C.

References PmeSharedUntransMsg::count, PmeSharedUntransMsg::lock, PmeSharedUntransMsg::msg, and procUntrans().

◆ recvTrans()

| void ComputePmeMgr::recvTrans | ( | PmeTransMsg * | msg | ) |

◆ recvUngrid()

| void ComputePmeMgr::recvUngrid | ( | PmeGridMsg * | msg | ) |

Definition at line 2464 of file ComputePme.C.

References copyPencils(), copyResults(), NAMD_bug(), and recvAck().

◆ recvUntrans()

| void ComputePmeMgr::recvUntrans | ( | PmeUntransMsg * | msg | ) |

◆ sendChargeGridReady()

| void ComputePmeMgr::sendChargeGridReady | ( | ) |

Definition at line 3599 of file ComputePme.C.

References chargeGridSubmittedCount, master_pe, pmeComputes, and ResizeArray< Elem >::size().

Referenced by cuda_check_pme_charges().

◆ sendData()

| void ComputePmeMgr::sendData | ( | Lattice & | lattice, |

| int | sequence | ||

| ) |

Definition at line 4036 of file ComputePme.C.

References sendDataHelper_errors, sendDataHelper_lattice, sendDataHelper_sequence, sendDataHelper_sourcepe, and sendDataPart().

Referenced by chargeGridReady().

◆ sendDataHelper()

| void ComputePmeMgr::sendDataHelper | ( | int | iter | ) |

◆ sendDataPart()

| void ComputePmeMgr::sendDataPart | ( | int | first, |

| int | last, | ||

| Lattice & | lattice, | ||

| int | sequence, | ||

| int | sourcepe, | ||

| int | errors | ||

| ) |

Definition at line 3914 of file ComputePme.C.

References PmeGrid::block1, PmeGrid::dim2, PmeGrid::dim3, endi(), PmeGridMsg::fgrid, iERROR(), iout, PmeGrid::K2, PmeGrid::K3, PmeGridMsg::lattice, PmeGridMsg::len, NAMD_bug(), ComputePmeUtil::numGrids, PmeGrid::order, PME_GRID_PRIORITY, PRIORITY_SIZE, PmeGridMsg::qgrid, PmeGridMsg::sequence, SET_PRIORITY, PmeGridMsg::sourceNode, PmeGridMsg::start, PmeGridMsg::zlist, and PmeGridMsg::zlistlen.

Referenced by sendData(), and NodePmeMgr::sendDataHelper().

◆ sendPencils()

| void ComputePmeMgr::sendPencils | ( | Lattice & | lattice, |

| int | sequence | ||

| ) |

Definition at line 3809 of file ComputePme.C.

References PmeGrid::block1, PmeGrid::block2, PmeGrid::dim2, endi(), ijpair::i, iERROR(), iout, ijpair::j, PmeGrid::K1, PmeGrid::K2, ComputePmeUtil::numGrids, sendDataHelper_lattice, sendDataHelper_sequence, sendDataHelper_sourcepe, sendPencilsPart(), and NodePmeMgr::zm.

Referenced by chargeGridReady().

◆ sendPencilsHelper()

| void ComputePmeMgr::sendPencilsHelper | ( | int | iter | ) |

◆ sendPencilsPart()

| void ComputePmeMgr::sendPencilsPart | ( | int | first, |

| int | last, | ||

| Lattice & | lattice, | ||

| int | sequence, | ||

| int | sourcepe | ||

| ) |

Definition at line 3654 of file ComputePme.C.

References PmeGrid::block1, PmeGrid::block2, PmeGridMsg::destElem, PmeGrid::dim2, PmeGrid::dim3, PmeGridMsg::fgrid, PmeGridMsg::hasData, ijpair::i, ijpair::j, PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, PmeGridMsg::lattice, PmeGridMsg::len, NAMD_bug(), ComputePmeUtil::numGrids, PmeGrid::order, PME_GRID_PRIORITY, PRIORITY_SIZE, PmeGridMsg::qgrid, PmeGridMsg::sequence, SET_PRIORITY, PmeGridMsg::sourceNode, PmeGridMsg::start, PmeGridMsg::zlist, PmeGridMsg::zlistlen, and NodePmeMgr::zm.

Referenced by sendPencils(), and NodePmeMgr::sendPencilsHelper().

◆ sendTrans()

| void ComputePmeMgr::sendTrans | ( | void | ) |

Definition at line 1967 of file ComputePme.C.

References CKLOOP_CTRL_PME_SENDTRANS, Node::Object(), PmeSlabSendTrans(), sendTransSubset(), Node::simParameters, and SimParameters::useCkLoop.

◆ sendTransBarrier()

| void ComputePmeMgr::sendTransBarrier | ( | void | ) |

Definition at line 1952 of file ComputePme.C.

◆ sendTransSubset()

| void ComputePmeMgr::sendTransSubset | ( | int | first, |

| int | last | ||

| ) |

Definition at line 1983 of file ComputePme.C.

References PmeGrid::dim3, fwdSharedTrans(), PmeGrid::K2, PmeTransMsg::lattice, NodePmeInfo::npe, ComputePmeUtil::numGrids, PmeTransMsg::nx, LocalPmeInfo::nx, LocalPmeInfo::ny_after_transpose, NodePmeInfo::pe_start, PME_TRANS_PRIORITY, PRIORITY_SIZE, PmeTransMsg::qgrid, NodePmeInfo::real_node, PmeTransMsg::sequence, SET_PRIORITY, PmeTransMsg::sourceNode, PmeTransMsg::x_start, LocalPmeInfo::x_start, and LocalPmeInfo::y_start_after_transpose.

Referenced by PmeSlabSendTrans(), and sendTrans().
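The pairing of a whole-range entry point (`sendTrans()`) with a `first`/`last` worker (`sendTransSubset()`), together with the reference to `SimParameters::useCkLoop`, suggests a common pattern: either process the full index range in one call, or split it into contiguous sub-ranges that can be handed to a parallel loop. The sketch below illustrates that pattern only. `splitInclusiveRange`, `SlabSender`, and the assumption that `first` and `last` are inclusive bounds are hypothetical and are not taken from ComputePme.C.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical helper: split the inclusive index range [0, n-1] into at most
// `chunks` contiguous, inclusive (first, last) sub-ranges of near-equal size.
std::vector<std::pair<int, int>> splitInclusiveRange(int n, int chunks) {
    std::vector<std::pair<int, int>> out;
    if (n <= 0 || chunks <= 0) return out;
    chunks = std::min(chunks, n);
    const int base = n / chunks;   // minimum length of every chunk
    const int extra = n % chunks;  // the first `extra` chunks get one more item
    int first = 0;
    for (int c = 0; c < chunks; ++c) {
        const int len = base + (c < extra ? 1 : 0);
        out.emplace_back(first, first + len - 1);
        first += len;
    }
    assert(first == n);  // every index is covered exactly once
    return out;
}

// Hypothetical stand-in for a manager with a whole-range entry point and a
// subset worker. It is not the NAMD class.
struct SlabSender {
    int numTargets;         // number of destinations to send to
    bool useParallelLoop;   // assumed analogue of a "use CkLoop" switch
    std::vector<int> sent;  // records which targets were processed

    // Worker: handles targets first..last, both bounds inclusive (assumption).
    void sendSubset(int first, int last) {
        for (int i = first; i <= last; ++i) sent.push_back(i);
    }

    // Entry point: one call over the whole range, or one call per chunk.
    // A real parallel loop would run the chunks concurrently; here they are
    // run sequentially to keep the sketch self-contained.
    void sendAll(int chunks) {
        if (!useParallelLoop) {
            sendSubset(0, numTargets - 1);
            return;
        }
        for (const auto &r : splitInclusiveRange(numTargets, chunks)) {
            sendSubset(r.first, r.second);
        }
    }
};
```

Keeping the per-range work in one function means the sequential path and the chunked path share the same code, which is presumably why both `sendTrans()` and `PmeSlabSendTrans()` appear as callers of `sendTransSubset()`.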

◆ sendUngrid()

| void ComputePmeMgr::sendUngrid | ( | void | ) |

Definition at line 2404 of file ComputePme.C.

References CKLOOP_CTRL_PME_SENDUNTRANS, ComputePmeUtil::numGrids, Node::Object(), PmeSlabSendUngrid(), sendUngridSubset(), Node::simParameters, and SimParameters::useCkLoop.

◆ sendUngridSubset()

| void ComputePmeMgr::sendUngridSubset | ( | int | first, |

| int | last | ||

| ) |

Definition at line 2420 of file ComputePme.C.

References PmeGrid::dim3, PmeGridMsg::fgrid, PmeGridMsg::len, ComputePmeUtil::numGrids, PME_OFFLOAD_UNGRID_PRIORITY, PME_UNGRID_PRIORITY, PmeGridMsg::qgrid, SET_PRIORITY, PmeGridMsg::sourceNode, PmeGridMsg::start, PmeGridMsg::zlist, and PmeGridMsg::zlistlen.

Referenced by PmeSlabSendUngrid(), and sendUngrid().

◆ sendUntrans()

| void ComputePmeMgr::sendUntrans | ( | void | ) |

Definition at line 2218 of file ComputePme.C.

References CKLOOP_CTRL_PME_SENDUNTRANS, PmeEvirMsg::evir, ComputePmeUtil::numGrids, Node::Object(), PME_UNGRID_PRIORITY, PmeSlabSendUntrans(), PRIORITY_SIZE, sendUntransSubset(), SET_PRIORITY, Node::simParameters, and SimParameters::useCkLoop.

◆ sendUntransSubset()

| void ComputePmeMgr::sendUntransSubset | ( | int | first, |

| int | last | ||

| ) |

Definition at line 2245 of file ComputePme.C.

References PmeGrid::dim3, fwdSharedUntrans(), PmeGrid::K2, NodePmeInfo::npe, ComputePmeUtil::numGrids, LocalPmeInfo::nx, PmeUntransMsg::ny, LocalPmeInfo::ny_after_transpose, NodePmeInfo::pe_start, PME_UNTRANS_PRIORITY, PRIORITY_SIZE, PmeUntransMsg::qgrid, NodePmeInfo::real_node, SET_PRIORITY, PmeUntransMsg::sourceNode, LocalPmeInfo::x_start, PmeUntransMsg::y_start, and LocalPmeInfo::y_start_after_transpose.

Referenced by PmeSlabSendUntrans(), and sendUntrans().

◆ submitReductions()

| void ComputePmeMgr::submitReductions | ( | ) |

Definition at line 4297 of file ComputePme.C.

References ComputePmeUtil::alchDecouple, ComputePmeUtil::alchFepOn, ComputePmeUtil::alchOn, ComputePmeUtil::alchThermIntOn, SubmitReduction::item(), ComputePmeUtil::lesFactor, ComputePmeUtil::lesOn, ComputePmeUtil::LJPMEOn, WorkDistrib::messageEnqueueWork(), NAMD_bug(), ComputePmeUtil::numGrids, Node::Object(), ComputePmeUtil::pairOn, REDUCTION_ELECT_ENERGY_PME_TI_1, REDUCTION_ELECT_ENERGY_PME_TI_2, REDUCTION_ELECT_ENERGY_SLOW, REDUCTION_ELECT_ENERGY_SLOW_F, REDUCTION_LJ_ENERGY_SLOW, REDUCTION_STRAY_CHARGE_ERRORS, ResizeArray< Elem >::resize(), Node::simParameters, simParams, ResizeArray< Elem >::size(), and SubmitReduction::submit().

Referenced by ComputePme::doWork(), and recvRecipEvir().

◆ ungridCalc()

| void ComputePmeMgr::ungridCalc | ( | void | ) |

Definition at line 2554 of file ComputePme.C.

References a_data_dev, cuda_errcheck(), CUDA_EVENT_ID_PME_COPY, CUDA_EVENT_ID_PME_KERNEL, CUDA_EVENT_ID_PME_TICK, deviceCUDA, end_forces, EVENT_STRIDE, f_data_dev, f_data_host, forces_count, forces_done_count, forces_time, DeviceCUDA::getDeviceID(), PmeGrid::K1, PmeGrid::K2, PmeGrid::K3, WorkDistrib::messageEnqueueWork(), PmeGrid::order, pmeComputes, ResizeArray< Elem >::size(), this_pe, and ungridCalc().

Referenced by ungridCalc().

Friends And Related Function Documentation

◆ ComputePme

| friend class ComputePme | friend |

Definition at line 385 of file ComputePme.C.

◆ NodePmeMgr

| friend class NodePmeMgr | friend |

Definition at line 386 of file ComputePme.C.

Member Data Documentation

◆ a_data_dev

| float* ComputePmeMgr::a_data_dev |

Definition at line 447 of file ComputePme.C.

Referenced by cuda_submit_charges(), ComputePme::doWork(), and ungridCalc().

◆ a_data_host

| float* ComputePmeMgr::a_data_host |

Definition at line 446 of file ComputePme.C.

Referenced by cuda_submit_charges(), and ComputePme::doWork().

◆ chargeGridSubmittedCount

| int ComputePmeMgr::chargeGridSubmittedCount |

Definition at line 472 of file ComputePme.C.

Referenced by chargeGridSubmitted(), ComputePmeMgr(), initialize_computes(), and sendChargeGridReady().

◆ charges_time

| double ComputePmeMgr::charges_time |

Definition at line 458 of file ComputePme.C.

Referenced by cuda_check_pme_charges(), and cuda_submit_charges().

◆ check_charges_count

| int ComputePmeMgr::check_charges_count |

Definition at line 460 of file ComputePme.C.

Referenced by ComputePmeMgr(), and cuda_check_pme_charges().

◆ check_forces_count

| int ComputePmeMgr::check_forces_count |

Definition at line 461 of file ComputePme.C.

Referenced by ComputePmeMgr(), and cuda_check_pme_forces().

◆ cuda_atoms_alloc

| int ComputePmeMgr::cuda_atoms_alloc |

Definition at line 451 of file ComputePme.C.

Referenced by ComputePmeMgr(), and ComputePme::doWork().

◆ cuda_atoms_count

| int ComputePmeMgr::cuda_atoms_count |

Definition at line 450 of file ComputePme.C.

Referenced by ComputePmeMgr(), cuda_submit_charges(), ComputePme::doWork(), and ComputePme::initialize().

◆ cuda_busy

| bool ComputePmeMgr::cuda_busy | static |

Definition at line 470 of file ComputePme.C.

Referenced by ComputePme::doWork().

◆ cuda_lock

| CmiNodeLock ComputePmeMgr::cuda_lock | static |

Definition at line 452 of file ComputePme.C.

Referenced by ComputePmeMgr(), ComputePme::doWork(), initialize_computes(), and recvAck().

◆ cuda_submit_charges_deque

| std::deque<cuda_submit_charges_args> ComputePmeMgr::cuda_submit_charges_deque | static |

Definition at line 469 of file ComputePme.C.

Referenced by ComputePme::doWork().
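The three static members `cuda_lock`, `cuda_busy`, and `cuda_submit_charges_deque` are all referenced from `ComputePme::doWork()`. A lock, a busy flag, and a queue of pending arguments are commonly combined to serialize access to a shared device: a caller either starts its work immediately or parks its arguments for later. The following is a minimal sketch of that general pattern, not the NAMD code. `SubmitArgs`, `SerializedSubmitter`, and the use of `std::mutex` in place of `CmiNodeLock` are assumptions made for illustration.

```cpp
#include <cassert>
#include <deque>
#include <mutex>
#include <vector>

// Hypothetical argument bundle, loosely modelled on the idea of a
// "submit charges" request. The field names are illustrative only.
struct SubmitArgs {
    int managerId;
    int sequence;
};

// Hypothetical serializer: at most one submission is in flight at a time.
class SerializedSubmitter {
public:
    // Returns true if the work was started now, false if it was queued.
    bool submitOrQueue(const SubmitArgs &args) {
        std::lock_guard<std::mutex> guard(lock_);
        if (busy_) {
            pending_.push_back(args);  // device in use: defer this request
            return false;
        }
        busy_ = true;
        started_.push_back(args);      // stands in for launching the work
        return true;
    }

    // Called when the in-flight work completes. Starts the oldest queued
    // request if there is one; otherwise clears the busy flag.
    // Returns true if a queued request was started.
    bool finishAndStartNext() {
        std::lock_guard<std::mutex> guard(lock_);
        assert(busy_);  // must only be called while work is in flight
        if (pending_.empty()) {
            busy_ = false;
            return false;
        }
        started_.push_back(pending_.front());
        pending_.pop_front();
        return true;
    }

    // Log of requests in the order they were started (for inspection only).
    const std::vector<SubmitArgs> &started() const { return started_; }

private:
    std::mutex lock_;
    bool busy_ = false;
    std::deque<SubmitArgs> pending_;
    std::vector<SubmitArgs> started_;
};
```

A deque fits here because requests are appended at the back and drained from the front, which preserves submission order.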

◆ end_charges

| cudaEvent_t ComputePmeMgr::end_charges |

Definition at line 454 of file ComputePme.C.

Referenced by chargeGridSubmitted(), ComputePmeMgr(), and cuda_check_pme_charges().

◆ end_forces

| cudaEvent_t* ComputePmeMgr::end_forces |

Definition at line 455 of file ComputePme.C.

Referenced by ComputePmeMgr(), cuda_check_pme_forces(), and ungridCalc().

◆ f_data_dev

| float* ComputePmeMgr::f_data_dev |

Definition at line 449 of file ComputePme.C.

Referenced by ungridCalc().

◆ f_data_host

| float* ComputePmeMgr::f_data_host |

Definition at line 448 of file ComputePme.C.

Referenced by ungridCalc().

◆ fftw_plan_lock

| CmiNodeLock ComputePmeMgr::fftw_plan_lock | static |

Definition at line 442 of file ComputePme.C.

Referenced by ComputePmeMgr(), PmeZPencil::fft_init(), PmeYPencil::fft_init(), PmeXPencil::fft_init(), initialize(), PmeZPencil::node_process_grid(), PmeZPencil::node_process_untrans(), NodePmeMgr::registerXPencil(), NodePmeMgr::registerYPencil(), NodePmeMgr::registerZPencil(), and ~ComputePmeMgr().

◆ forces_count

| int ComputePmeMgr::forces_count |

Definition at line 456 of file ComputePme.C.

Referenced by cuda_check_pme_forces(), and ungridCalc().

◆ forces_done_count

| int ComputePmeMgr::forces_done_count |

Definition at line 457 of file ComputePme.C.

Referenced by cuda_check_pme_forces(), and ungridCalc().

◆ forces_time

| double ComputePmeMgr::forces_time |

Definition at line 459 of file ComputePme.C.

Referenced by cuda_check_pme_forces(), and ungridCalc().

◆ master_pe

| int ComputePmeMgr::master_pe |

Definition at line 462 of file ComputePme.C.

Referenced by chargeGridSubmitted(), initialize_computes(), recvAck(), and sendChargeGridReady().

◆ pmeComputes

| ResizeArray<ComputePme*> ComputePmeMgr::pmeComputes |

Definition at line 482 of file ComputePme.C.

Referenced by chargeGridReady(), cuda_check_pme_forces(), ComputePme::doWork(), getComputes(), ComputePme::noWork(), recvRecipEvir(), sendChargeGridReady(), and ungridCalc().

◆ pmemgr_lock

| CmiNodeLock ComputePmeMgr::pmemgr_lock |

Definition at line 443 of file ComputePme.C.

Referenced by ComputePmeMgr(), and ~ComputePmeMgr().

◆ saved_lattice

| Lattice* ComputePmeMgr::saved_lattice |

Definition at line 475 of file ComputePme.C.

Referenced by chargeGridSubmitted(), and recvChargeGridReady().

◆ saved_sequence

| int ComputePmeMgr::saved_sequence |

Definition at line 476 of file ComputePme.C.

Referenced by chargeGridSubmitted(), cuda_check_pme_charges(), cuda_check_pme_forces(), and recvChargeGridReady().

◆ sendDataHelper_errors

| int ComputePmeMgr::sendDataHelper_errors |

Definition at line 401 of file ComputePme.C.

Referenced by sendData(), and NodePmeMgr::sendDataHelper().

◆ sendDataHelper_lattice

| Lattice* ComputePmeMgr::sendDataHelper_lattice |

Definition at line 398 of file ComputePme.C.

Referenced by sendData(), NodePmeMgr::sendDataHelper(), sendPencils(), and NodePmeMgr::sendPencilsHelper().

◆ sendDataHelper_sequence

| int ComputePmeMgr::sendDataHelper_sequence |

Definition at line 399 of file ComputePme.C.

Referenced by sendData(), NodePmeMgr::sendDataHelper(), sendPencils(), and NodePmeMgr::sendPencilsHelper().

◆ sendDataHelper_sourcepe

| int ComputePmeMgr::sendDataHelper_sourcepe |

Definition at line 400 of file ComputePme.C.

Referenced by sendData(), NodePmeMgr::sendDataHelper(), sendPencils(), and NodePmeMgr::sendPencilsHelper().

◆ this_pe

| int ComputePmeMgr::this_pe |

Definition at line 463 of file ComputePme.C.

Referenced by ComputePmeMgr(), and ungridCalc().

The documentation for this class was generated from the following file: ComputePme.C

1.8.14
